By Kai Gray, VP of Operations at Carbonite

I feel like tectonic plates are shifting beneath the IT world. I’ve been struggling to put my finger on what is making me feel this way, but slowly things have come into focus. These are my thoughts on how cloud computing has forever changed the economics of IT by shifting the balance of power.

The cloud has fundamentally changed business models: it has shifted time-to-market, entry points and who can do what. These byproducts of massive elasticity are part of an even greater evolutionary change that is occurring right now: the cloud is having a pronounced impact on the supply chain. In the near term, that impact will amount to a tidal wave of changes that will cause huge pain for some and spawn incredible innovation and wealth for others. As I see it, the cloud has started a chain of events that will change our industry forever:

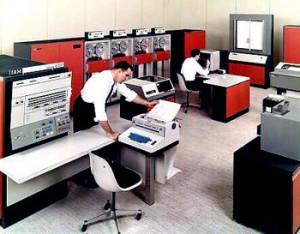

1) Big IT used to rule the datacenter. Not long ago, large infrastructure companies were at the heart of IT. The EMCs, Dells, Ciscos, HPs and IBMs designed, sourced, supplied and configured the hardware behind nearly all of the computing and storage power in the world. Every server closet was packed full of name-brand equipment, and the datacenter was no different. A quick tour of any datacenter would, and still will, showcase the wares of these behemoths of the IT world. These companies developed sophisticated supply and sales channels that produced high-margin businesses built on some very good products. That ecosystem stretched from the OEMs and ODMs that produced the bent metal to the VARs and distributors who sold the finished products. Think of De Beers, the diamond mine owner and distributor. What are the differences between a company like HP and De Beers? Not very much, but the cloud began to change all that.

2) Cloud Computing. Gradually, we were introduced to the notion of cloud computing. We started using products that moved resources away from us, and we became comfortable with not needing to touch the hardware. Our email “lived” somewhere else, our backups “lived” somewhere else and our computing cycles “lived” somewhere else. With each incremental step, our comfort level rose until the cloud stopped being a question and turned into an expectation. This process set off a dramatic shift in supply chain economics.

3) Supply Chain Economics. The confluence of massive demand and near-free products (driven by a need to expand customer acquisition) changed how people had to think about infrastructure. All of a sudden, cloud providers had to think about infrastructure in terms of true scalability. This meant acquiring and managing massive amounts of infrastructure at the lowest possible cost. This was, and still is, fundamentally different from the way the HPs, Dells and Ciscos thought about the world, and those companies were unable to address the needs of this new market effectively. This isn’t to say that the big IT companies can’t, just that it’s hard for them. It’s hard to accept shrinking margins and “openness.” The people brave enough to promote such wild ideas are branded as heretics and accused of rocking the boat (even as the boat is sinking). Eventually, the economic and scale requirements forced cloud providers to tackle the supply chain and go direct.

4) Going Direct. As cloud providers developed strong supply chain relationships and built up their competencies in hardware engineering and logistics, they became more closely tied to the ODMs (http://en.wikipedia.org/wiki/Original_design_manufacturer) and other primary suppliers. Huge initiatives focused on driving down the cost of everything emerged from the likes of Amazon, Google and Facebook. For example, Google began working directly with Intel and AMD to develop custom chipsets that allow it to run at efficiency levels never before seen, and Facebook started the Open Compute Project, which seeks to open-source design schematics that were once locked in vaults.

In short, anyone focused on cost and large scale pushes the supply chain envelope.

…and here it gets interesting.

Cloud providers now account for more supplier revenue than the Big IT companies. Or, maybe better stated, cloud providers account for more hope of revenue (HoR) than Big IT. So, what does that mean? It means that the Big IT companies no longer receive the biggest discounts available from the suppliers. The biggest discounts are going to the end users and to the low-margin companies built solely on servicing the infrastructure needs of cloud providers. As a result, Big IT is at even more of a competitive disadvantage than it already was. The cycle is now in full swing. If you think this isn’t happening, just look at HP and Dell right now. They don’t know how to interact with a huge set of end users without caving in their margins and cannibalizing their existing businesses. Some will choose to amputate while others will go down kicking, but declining margins and open information will take their toll with excruciating pain.

What comes of all this? I don’t know. But here are my observations:

1) Access to the commodity providers (ODMs and suppliers) is relatively closed. To be at all interesting to ODMs and suppliers, you have to be doing things at enough volume that it is worthwhile for them to engage with you. That will change. The commodity suppliers will learn how to work in different markets, but there will be huge opportunity for companies that help them get there. When access to ODMs and direct suppliers opens up to traditional enterprise companies, so that they can truly and easily take advantage of commodity hardware, then, as they say, goodnight.

2) Companies that provide some basic interfaces between the suppliers and the small(er) consumers will do extremely well. For me, this means configuration management of some sort, but it could be anything that helps accelerate the linkage between supplier and end user. The day will come when small IT shops have direct access to suppliers and are able to custom-build hardware in the same way that huge cloud providers do today. Some might argue that there is no need for small shops to do this: that they can use other cloud providers, that it’s too time-consuming to do it on their own, and that their needs are not unique enough to support such a relationship. Yes, yes, and yes… for right now. Make it easy for companies to realize the cost and management efficiencies of direct supplier access, and I don’t know of anyone who wouldn’t take you up on that. Maybe this is the evolution of the “private cloud” concept, but all I know is that, right now, the “private cloud” talk is dominated by the Big IT folks, so the conflict of interest is too great.

3) It’s all about the network. I don’t think the network is being addressed in the same way as other infrastructure components. I almost never hear about commodity “networks,” yet I constantly hear about commodity “hardware.” I’m not sure why. Maybe Cisco, Juniper and the other network providers are good at deflecting, maybe it’s too hard a problem to solve, or maybe the cost isn’t a focal point (yet). Whatever the reason, I think this is a huge problem/opportunity. Without the network, everything else can just go away. Period. The entire conversation driving commodity-whatever is predicated on delivering lots of data to people at very low cost. The same rules that drive commoditization need to be applied to the network, and right now I only know of one or two huge companies that are even thinking in these terms.

At any given time, multiple themes are in play that, looking back, we summarize as change. People say that the Internet changed everything. And, before that, the PC changed everything. What we’re actually describing is a series of changes that happened over a period of time and had the cumulative effect of making us say, “How did we ever do X without Y?” I believe that the commoditization of infrastructure is just one of the themes within the change that will be described as Cloud Computing. I contend, however, that the day is almost upon us when everybody, from giant companies to SMBs, will say, “Why did we ever buy anything but custom hardware directly from the manufacturer?”

This post originally appeared on kaigray.com. It does not necessarily reflect the views or opinions of GreenPages Technology Solutions.


Nokia’s Data Center Services division has unveiled plans to launch mobile telcos into the cloud. Plans include a custom-made multivendor infrastructure to support its transformation consulting services.
