Picture credit: Michael Heiss/Flickr

Here’s the challenge. Your CEO won’t accept anything less than a world-class IT infrastructure that meets the expectations of the company’s most informed line-of-business leaders. If you’re the CIO and you don’t want to rely on public cloud service providers, are you ready to deliver the caliber of IT services that they can provision?

Take a moment. Consider the implications. Ponder the impact of your actions.

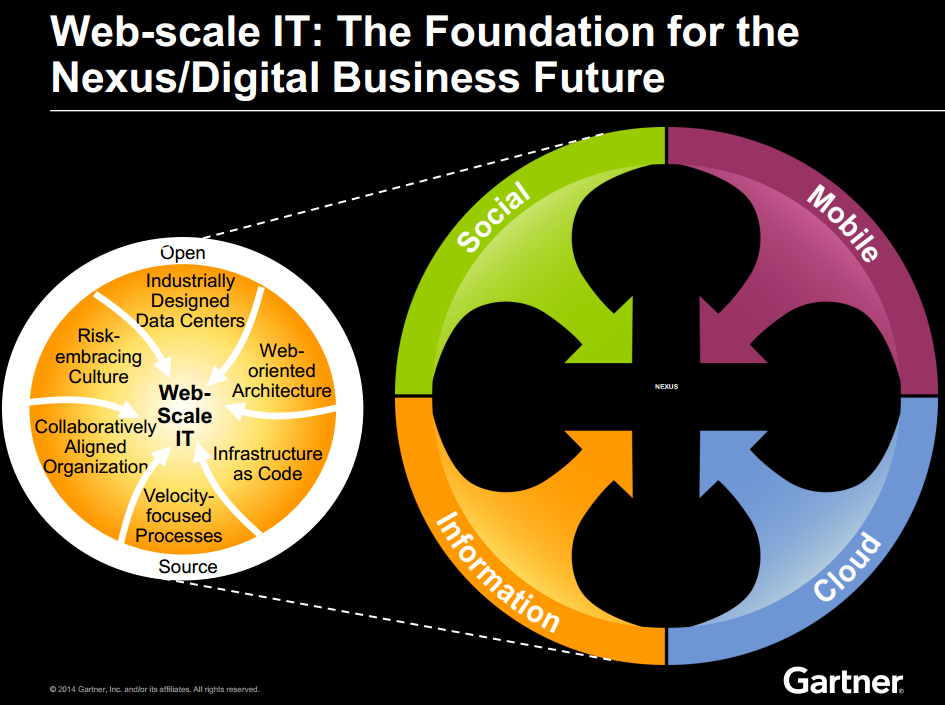

The leading public cloud service providers routinely design and assemble their own data center infrastructure components, driven by their extreme needs for scale and cost control. Whatever the provider, these devices share a common requirement: they run an open-source operating system, such as Linux, along with various other purpose-built open-source software components. Gartner, Inc. refers to this trend as the Web-scale IT phenomenon.

Why you need to adopt Web-scale IT practices

What is Web-scale IT? It’s everything that large cloud services firms, such as Google, Amazon and Facebook, do to achieve extreme levels of compute and storage delivery. The methodology includes industrial data center components, web-oriented architectures, programmable management, agile processes, a collaborative organizational style and a learning culture.

According to Gartner, by 2017 Web-scale IT will be an architectural approach found operating in 50 percent of all global enterprises, up from less than 10 percent in 2013. However, Gartner also warns that most corporate IT organizations face a significant expertise shortfall, which creates strong demand for long-term technical staff training and near-term consulting guidance.

Gartner reports that the legacy capacity planning and performance management skills within typical enterprise IT teams are no longer sufficient to meet the needs of today’s rapidly evolving large multinational businesses. By 2016, according to Gartner’s assessment, the lack of required skills will be a major constraint to growth for 80 percent of all large companies.

Gartner also believes that Web-scale IT organizations are very different from conventional IT teams; in particular, their members proactively learn from one another. Furthermore, Web-scale organizations extend the data center virtualization concept by architecting applications to be stateless wherever possible.

“While major organizations continue to maintain and sustain their conventional capacity-planning skills and tools, they need to regularly re-evaluate the tools available and develop the capacity and performance management skills present in the Web-scale IT community,” said Ian Head, research director at Gartner.

Hybrid cloud computing services built from stateless applications in this way are better equipped to scale geographically and to share multiple data centers with limited impact on user performance. This approach also blurs the lines between capacity planning, fault-tolerant design and disaster recovery.

When to plan for large-scale web systems

Demand shaping uses various techniques to adjust the quantity of resources required by any one service so that the infrastructure does not become overloaded. Gartner predicts that through 2017, 25 percent of large organizations will use demand shaping to plan and manage capacity, up from less than 1 percent in 2014. So you’ll need a plan of action to take you from your current scale to Web scale.
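To make the idea concrete, here is a minimal sketch of one possible demand-shaping policy, assuming each service submits a resource request (in arbitrary units) tagged with a priority. Gartner does not prescribe this algorithm; the tiered, proportional allocation below, the function name `shape_demand` and the service names are all hypothetical illustrations.

```python
from collections import defaultdict


def shape_demand(capacity: float, demands: dict[str, tuple[float, int]]) -> dict[str, float]:
    """Grant each service a share of `capacity` so the total never exceeds it.

    `demands` maps a service name to (requested_units, priority), where a
    lower priority number means more important (hypothetical convention).
    """
    # Group services into priority tiers.
    tiers: dict[int, list[tuple[str, float]]] = defaultdict(list)
    for service, (requested, priority) in demands.items():
        tiers[priority].append((service, requested))

    remaining = capacity
    allocation: dict[str, float] = {}
    for priority in sorted(tiers):
        members = tiers[priority]
        tier_total = sum(requested for _, requested in members)
        # A tier that fits is granted in full; otherwise its members share
        # whatever capacity is left in proportion to what they asked for.
        if tier_total <= remaining:
            scale = 1.0
        else:
            scale = max(remaining, 0.0) / tier_total
        for service, requested in members:
            granted = requested * scale
            allocation[service] = granted
            remaining = max(remaining - granted, 0.0)
    return allocation


# Hypothetical requests: checkout is critical, the other two are not.
demands_now = {
    "checkout": (60.0, 0),
    "search": (30.0, 1),
    "recommendations": (40.0, 1),
}
# checkout keeps its 60 units; search and recommendations split the
# remaining 40 units in a 3:4 ratio instead of overloading the pool.
print(shape_demand(100.0, demands_now))
```

The design choice here is to protect the most important services first and squeeze lower-priority ones proportionally, rather than letting every service compete for the same overloaded pool.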

Gartner says that CIOs and other IT leaders must plan both the application and infrastructure architecture carefully. Infrastructure and product teams must work together to use application functionality that allows an orderly degradation of service, reducing non-essential features and functions when necessary.
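An orderly degradation of service can be sketched as load-based feature shedding. The example below is a minimal illustration, not Gartner's method: it assumes the application can measure its own utilization as a fraction between 0.0 and 1.0, and the `Feature` type, the thresholds and the feature names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    essential: bool
    # Utilization (0.0 to 1.0) above which a non-essential feature is switched off.
    shed_above: float = 1.0


def active_features(features: list[Feature], utilization: float) -> list[str]:
    """Return the features to keep serving at the given utilization level.

    Essential features always stay on; non-essential ones are shed once
    utilization climbs past their threshold, giving an orderly degradation.
    """
    return [f.name for f in features if f.essential or utilization <= f.shed_above]


# Hypothetical catalog for an e-commerce front end.
CATALOG = [
    Feature("checkout", essential=True),
    Feature("search", essential=True),
    Feature("recommendations", essential=False, shed_above=0.75),
    Feature("personalized_banners", essential=False, shed_above=0.60),
]

for load in (0.5, 0.7, 0.9):
    print(load, active_features(CATALOG, load))
```

At 70 percent utilization, for example, the banners are dropped while checkout, search and recommendations stay up; at 90 percent, only the essential checkout and search features remain.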

Moreover, the distinctive architectures and sheer size of Web-scale IT organizations make traditional capacity planning tools of limited use. Instead, in-memory computing and deep analytics tools are generally used to extract the required data directly from the infrastructure and from reporting capabilities built into software applications. This information then informs real-time decisions to allocate resources and manage potential bottlenecks.

“These operational skills and tools are currently unique to each Web-scale organization and are not yet available in most end-user organizations,” said Mr. Head. “However they will be in increasingly high demand as large organizations of all types begin to pursue the tangible business benefits of a Web-scale approach to IT infrastructure.”

Getting ready to scale out your infrastructure

So, if your IT organization isn’t prepared for this transition, what are your options? Your search for hybrid cloud training services and consulting guidance should start with a requirement for proven OpenStack expertise. Because OpenStack is the leading open-source cloud Infrastructure-as-a-Service platform, you’re likely to need IT talent that already has experience with prior OpenStack deployments.

That said, choose wisely. Keep in mind that few suppliers have Web-scale infrastructure experience. As you prepare your list of qualified vendors, ask for customer case studies and their use case scenarios. To reduce the risk of procurement remorse, take all the time you need to perform due diligence.

Regardless of your IT operational budget, familiarize yourself with the OpenStack trailblazers, such as eNovance, and become versed in the language and processes of the Web-scale infrastructure deployment pioneers. Now you’ll be ready to embark upon your scale-out infrastructure journey.